Welcome to Daily Zaps, your regularly-scheduled dose of AI news ⚡

Here’s what we got for ya today:

🗣️ How ChatGPT voices were chosen

🕷️ Google's AI search struggles

🇨🇭 OpenAI’s “new” safety team

📵 AI “kill switch”

Let’s get right into it!

STARTUPS

How ChatGPT voices were chosen

OpenAI published an update on how the voices for ChatGPT were chosen, including a timeline for Sky, one of its voice options. CEO Sam Altman said that Sky's voice is not Scarlett Johansson's and was never intended to resemble hers. Even so, OpenAI paused the use of Sky's voice out of respect for Johansson.

The voice actors for ChatGPT's features, introduced in September 2023, were carefully selected through a five-month process involving professional casting directors and extensive auditions. Each actor was compensated above market rates. OpenAI emphasized that the voices were created without imitating any celebrity. The company is now addressing Johansson's concerns and plans to add new voices to better cater to users' preferences.

BIG TECH

Google's AI search struggles

Google unveiled its new AI search feature, AI Overview, designed to answer complex questions by combining statements from language models with live web links. However, the feature has faced backlash for generating erroneous and dangerous advice, such as recommending adding glue to pizza sauce and eating rocks for nutrients.

These errors have undermined trust in Google Search, which serves over two billion users. Despite previous issues with AI implementations like Bard, Google continues to push AI integration to compete with rivals Microsoft and OpenAI. Google claims most AI Overview queries yield high-quality results and will use problematic examples to refine the system.

Join the live session: automate compliance & streamline security reviews

Whether you’re starting or scaling your company’s security program, demonstrating top-notch security practices and establishing trust is more important than ever.

Vanta automates compliance for SOC 2, ISO 27001, and more, saving you time and money — while helping you build customer trust.

And, you can streamline security reviews by automating questionnaires and demonstrating your security posture with a customer-facing Trust Center, all powered by Vanta AI.

STARTUPS

OpenAI’s “new” safety team

OpenAI is forming a new safety team led by CEO Sam Altman and board members Adam D’Angelo and Nicole Seligman. This team will recommend safety and security measures for OpenAI's projects. Its first task is to evaluate and improve OpenAI’s current processes and safeguards, with findings presented to the board for implementation.

This move comes after key AI researchers, including co-founder Ilya Sutskever and Jan Leike, left the company citing safety concerns. Following these departures, OpenAI dissolved its Superalignment team, which had focused on AI safety. OpenAI also recently introduced a new AI model and the voice "Sky," which drew controversy over its perceived resemblance to Scarlett Johansson's voice. The new safety team includes other key members, but concerns remain about whether it addresses the issues raised by former employees.

GLOBAL POLICY

AI “kill switch”

Last week, major tech companies, including Microsoft, Amazon, and OpenAI, along with governments from the U.S., China, Canada, the U.K., France, South Korea, and the UAE, reached a landmark agreement on AI safety at the Seoul AI Safety Summit. They made voluntary commitments to ensure the safe development of advanced AI models. These commitments include publishing safety frameworks to prevent misuse, setting "red lines" for intolerable risks like cyberattacks and bioweapons, and implementing a "kill switch" to halt AI development if risks cannot be mitigated.

U.K. Prime Minister Rishi Sunak highlighted the global consensus on AI safety commitments. This pact builds on previous commitments made in November and applies specifically to frontier AI models, such as OpenAI's GPT. The agreement aims to enhance transparency and accountability, with input from trusted actors, ahead of the next AI summit in France in 2025.

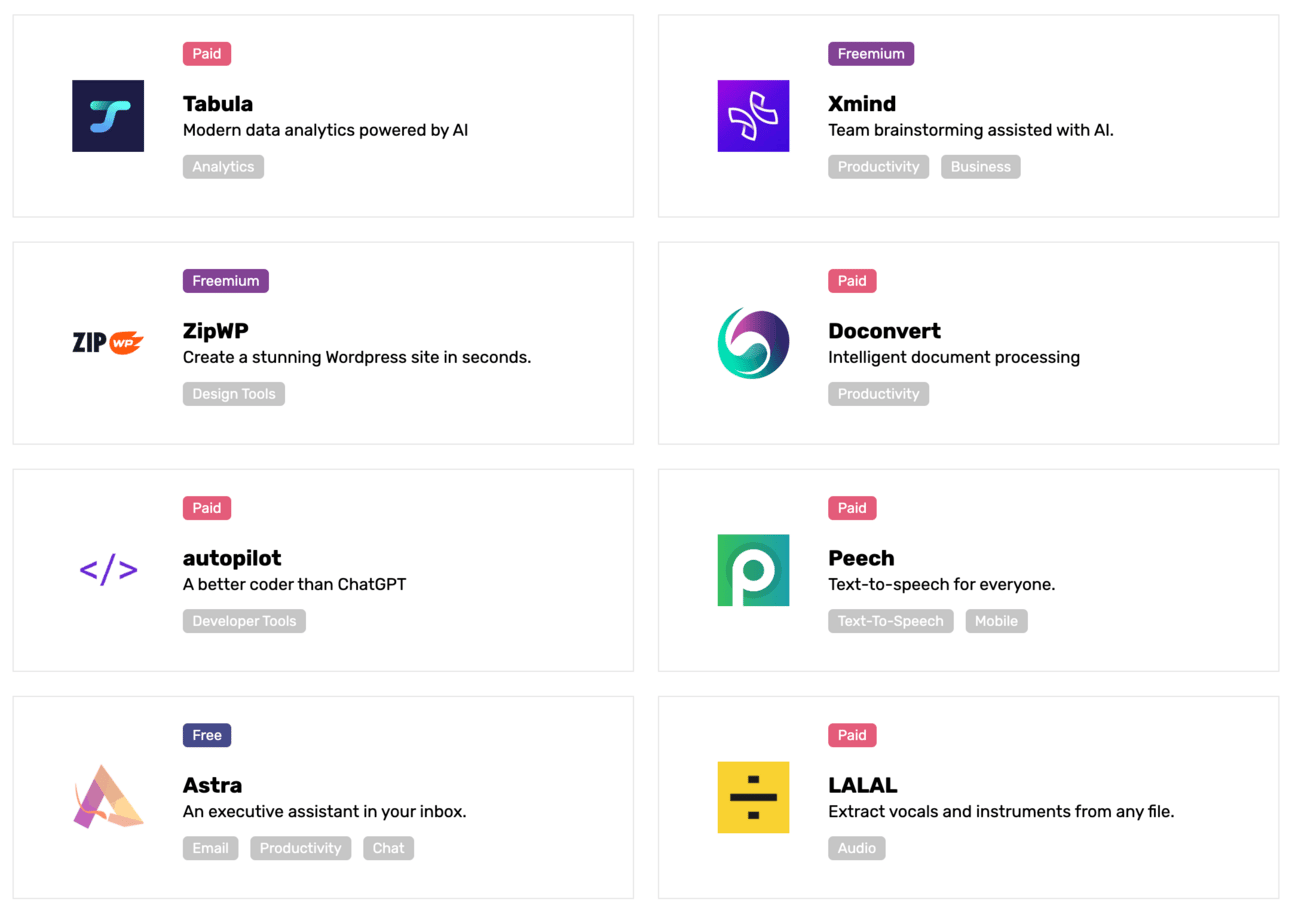

In case you’re interested — we’ve got hundreds of cool AI tools listed over at the Daily Zaps Tool Hub.

If you have any cool tools to share, feel free to submit them or get in touch with us by replying to this email.


